The first time I was asked to design a multi-cloud approach for a platform, I thought ‘this guy must be on drugs!’. For most people involved with public cloud computing it’s still fairly taboo to even talk about it. The reasons are fairly obvious: you spent time and effort choosing the best cloud provider for your needs, and a good deal of man-hours architecting and building your platform, so surely doubling up on the number of platforms is madness? Well, maybe not. Issues around vendor lock-in, price changes (in AWS specifically) and disaster recovery have got CEOs and CTOs looking at the long-term future of their platforms.

Do you want to:

- Spin up resources in any public cloud

- Migrate traffic from one vendor to another

- Take advantage of pricing discounts

- Have faster content delivery speeds

Then maybe the multi-cloud approach isn’t such a crazy idea after all!

How does it work?

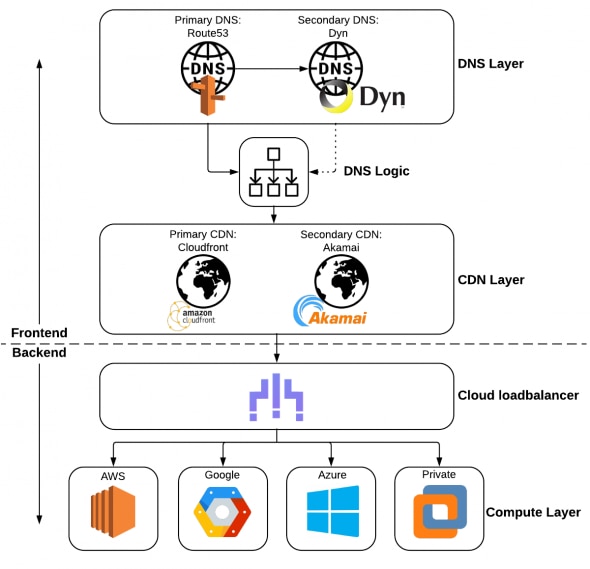

The key here is to have a redundant (or active/active) setup for the key parts of your public-facing infrastructure: the DNS, CDN and Compute layers. We’ll imagine for the sake of ease that the origin in our example is running a Kubernetes stack which doesn’t use vendor-specific services. In the real world you’d likely have dependencies outside of your cluster, but discussing the intricacies of public cloud vendor lock-in is a blog post all of its own.

DNS

While having a second DNS provider is not strictly necessary, if you’re providing a truly fault-tolerant system, it needs to be there. It wasn’t that long ago that one of the world’s biggest DNS providers (Dyn) was knocked offline in a DDoS attack, leaving thousands of sites (including big names like Spotify, Twitter and PayPal) unreachable. In any case, smart DNS services can be used to route traffic to multiple Content Delivery Networks based on a user’s geolocation, current CDN latency, time of day, etc. Next-generation providers already have all these features baked into their products, and routing traffic based on real-time metrics is now affordable for small businesses, not just large enterprises.
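To make the routing decision a little more concrete, here is a minimal sketch in Python of the kind of choice a smart DNS service makes: pick the healthy CDN with the lowest recent latency for the user's region. This is not any particular provider's API. The names `CdnStatus` and `pick_cdn`, the region codes and the latency figures are all made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CdnStatus:
    """Snapshot of one CDN as seen by a (hypothetical) DNS routing layer."""
    name: str
    healthy: bool
    latency_ms: dict[str, float]  # region code -> recent latency measurement


def pick_cdn(region: str, cdns: list[CdnStatus]) -> str:
    """Return the name of the healthy CDN with the lowest latency for `region`.

    A CDN with no measurement for the region is ranked last rather than
    excluded, so users in an unmeasured region still get an answer.
    """
    candidates = [c for c in cdns if c.healthy]
    if not candidates:
        raise RuntimeError("no healthy CDN available")
    best = min(candidates, key=lambda c: c.latency_ms.get(region, float("inf")))
    return best.name


if __name__ == "__main__":
    # Illustrative numbers only, not real measurements.
    cdns = [
        CdnStatus("cdn-a", True, {"eu": 40.0, "us": 25.0}),
        CdnStatus("cdn-b", True, {"eu": 22.0, "us": 60.0}),
    ]
    for region in ("eu", "us"):
        print(region, "->", pick_cdn(region, cdns))
```

A real service would refresh the health and latency figures continuously and could add further inputs such as time of day, but the core decision is this simple.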

CDN

In this setup the CDN need not be aware at all that you’re using multiple DNS providers or multiple clouds; it’s just the middleman, accelerating content to the end user. In any given region, one CDN will usually outperform another. By utilising multiple CDNs and mapping the right CDN vendor to each end user, you give your customers the best possible experience for their location. There’s inevitably a bit of complication in having multiple CDNs, mostly due to custom configurations and unique ‘edge’ functions. With a little bit of elbow grease, custom configurations can usually be copied from one CDN to another in days, not weeks. Remember, you can’t have sophistication without complexity; they’re bedfellows!

Compute

Now here’s the ‘sexy’ part of the configuration. By utilising a cloud load balancer you abstract away the public cloud (AWS, Azure, Google Cloud) as the end destination for a web request. The cloud load balancer provides all the features you’d expect of (say) an Amazon ELB: horizontal scaling, health checks, and automatic adding and removing of nodes in a cluster. But as the cloud load balancer is not vendor-specific, traffic can be routed anywhere you like.
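As a rough illustration of the idea, the Python sketch below models a vendor-neutral backend pool: backends in different clouds sit in one list, a health check marks each one up or down, and requests are spread round-robin across whatever is currently healthy. The class names, the URLs and the injected `probe` function are hypothetical, and a real cloud load balancer does far more than this.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import count
from typing import Callable, Iterable


@dataclass
class Backend:
    url: str
    cloud: str            # e.g. "aws", "azure", "gcp"; informational only
    healthy: bool = True


class CloudAgnosticPool:
    """Round-robin over healthy backends, regardless of which cloud hosts them."""

    def __init__(self, backends: Iterable[Backend], probe: Callable[[Backend], bool]):
        self._backends = list(backends)
        self._probe = probe        # assumed health-check function, supplied by the caller
        self._counter = count()

    def run_health_checks(self) -> None:
        """Mark each backend up or down; a probe that raises counts as down."""
        for backend in self._backends:
            try:
                backend.healthy = bool(self._probe(backend))
            except Exception:
                backend.healthy = False

    def next_backend(self) -> Backend:
        """Return the next healthy backend in round-robin order."""
        healthy = [b for b in self._backends if b.healthy]
        if not healthy:
            raise RuntimeError("no healthy backends in any cloud")
        return healthy[next(self._counter) % len(healthy)]


if __name__ == "__main__":
    pool = CloudAgnosticPool(
        [
            Backend("https://origin-aws.example.com", "aws"),
            Backend("https://origin-azure.example.com", "azure"),
            Backend("https://origin-gcp.example.com", "gcp"),
        ],
        probe=lambda b: b.cloud != "azure",  # pretend Azure is failing its check
    )
    pool.run_health_checks()
    for _ in range(4):
        print(pool.next_backend().url)
```

Passing the probe in as a function keeps the sketch free of network calls; in practice it would be an HTTP or TCP check against each origin.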

What are the use cases?

Performance

As previously stated, CDN latency differs between vendors from region to region. To get the quickest delivery of your content in every region, a multi-CDN approach has to be used. Likewise, your company may have a presence in multiple regions; some may be better served by AWS, others by Azure. With a multi-cloud approach you consume the services that best meet your needs.

Disaster recovery/high availability

If you think you’re safe because everything’s ‘in the cloud’ then you’d do well to read the story of the code guys. If you don’t know, in a nutshell, their AWS account was hijacked, held to ransom and then maliciously deleted. They lost everything, including all their backups… they’re not a company anymore. If you’re responsible for disaster recovery, then enabling your workload in another public cloud is actually pretty savvy.

Avoid costly vendor lock-in

It hasn’t happened yet, but every IT manager lives in fear that AWS will suddenly start ramping up its prices (personally I don’t think it will ever happen, as it’s not their model and never will be). Price rises do happen though (Google recently introduced a massive price hike for use of its ‘Maps’ application), and they might come at the CDN level or the compute layer. Either way, if you’ve built your platform using the multi-cloud approach, you can just switch the services you’re consuming. Typically public cloud use is charged per request or per hour, so you’re not tied into complicated exit strategies; you can just leave!
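In practice, ‘just switching’ is usually done gradually by shifting a growing share of traffic to the new vendor. The Python sketch below shows one common way to do that, a deterministic weighted split keyed on something stable such as a user or session ID. The function name `choose_vendor`, the vendor names and the weights are assumptions for illustration, not a feature of any specific product.

```python
from __future__ import annotations

import hashlib


def choose_vendor(request_key: str, weights: dict[str, int]) -> str:
    """Map a stable key (e.g. a user ID) to a vendor in proportion to `weights`.

    The same key always lands on the same vendor for a given set of weights,
    which keeps a user's traffic from bouncing between clouds mid-session.
    """
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one vendor needs a positive weight")

    # Hash the key into a bucket in the range [0, total).
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % total

    # Walk the vendors until the bucket falls inside one vendor's share.
    for vendor, weight in weights.items():
        if bucket < weight:
            return vendor
        bucket -= weight
    raise AssertionError("unreachable: bucket is always below the total weight")


if __name__ == "__main__":
    # Hypothetical migration step: 80% stays on the old vendor, 20% moves.
    weights = {"old-vendor": 80, "new-vendor": 20}
    for user in ("alice", "bob", "carol"):
        print(user, "->", choose_vendor(user, weights))
```

Moving the weights from 100/0 to 0/100 over a few steps completes the migration, and setting them back is the rollback.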

To sum up…

The multi-cloud approach is real and achievable. In my honest opinion, though, it’s almost entirely academic at this point, as I talk to a lot of companies ‘looking’ at it or ‘thinking’ about it. I myself have drawn up several proposals for customers, all of which now sit firmly in email archives, to be dusted off when they ‘revisit it, in the future’. It’s my belief that the shift to the cloud has been so painful for most companies that the idea of adding another cloud fills them with dread. Once the dust settles, in a few more years, multi-cloud will start to become more commonplace, but for now it remains the domain of the brave.

If you’re one of the brave, or if you have any questions about how to effectively adopt the cloud for your business, optimize your cloud performance or reduce costs, contact us today and we’ll help you out with your performance and security needs.