Bots have become a hot topic as online security has become a higher priority and, as we mentioned in a previous article, they account for almost half of all Internet traffic. Automated and increasingly sophisticated bots are used to accelerate and simplify online processes.

Bots are essential to online infrastructure but can also pose a serious security threat when deployed with malicious intent. Malicious bots can take part in Distributed Denial of Service (DDoS) attacks, serve as a means of extracting valuable customer data, or both.

Because of the increased visibility and publicity around security breaches, bots get categorized as a security issue, and marketing and other teams often don’t give them much thought. However, even after you set up initial security solutions and filter out bad bot traffic, plenty of bot traffic and “residue” remains. It can distort your analytics data and make it harder to draw precise, data-driven conclusions about visitor behavior.

For example, bots can account for as much as 30-40% of a retail site’s traffic, which means that marketing data ends up skewed. All segmentation efforts, A/B testing, product mix analysis, and even simple metrics like bounce rate then need to be re-evaluated in light of the known bot traffic.
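As a rough illustration of that re-evaluation, the sketch below backs a human-only bounce rate out of a blended figure. The function name and the sample numbers are hypothetical, and it assumes you already have an estimate of the bot share and of how often bot sessions bounce.

```python
def human_bounce_rate(total_sessions, blended_bounce_rate, bot_share, bot_bounce_rate):
    """Estimate the bounce rate of human sessions only.

    All inputs are assumptions you supply: bot_share is the fraction of
    sessions attributed to bots, bot_bounce_rate is how often those bounce.
    """
    if not 0 <= bot_share < 1:
        raise ValueError("bot_share must be in [0, 1)")
    bot_sessions = total_sessions * bot_share
    human_sessions = total_sessions - bot_sessions
    total_bounces = total_sessions * blended_bounce_rate
    bot_bounces = bot_sessions * bot_bounce_rate
    return (total_bounces - bot_bounces) / human_sessions


# Hypothetical numbers: 10,000 sessions, 58% blended bounce rate,
# 30% of sessions from bots that bounce every time.
rate = human_bounce_rate(10_000, 0.58, 0.30, 1.0)
print(f"{rate:.0%}")  # prints 40%
```

With these made-up inputs, a reported 58% bounce rate corresponds to roughly 40% for human visitors, which shows how far a simple metric can drift.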

There are two main types of bad bot traffic that get picked up by your Google Analytics (GA) profiles: bots that never actually visit your site, and bots that visit and fully render your site.

The first type, also known as “ghost bots”, is nothing more than a spam nuisance, much like flyers in your mailbox, and mostly appears as referral traffic in GA.

The second type, the so-called “zombie bots”, produces analytics spam as a by-product of whatever else they are doing. They render your website and trigger your analytics code as a side effect.

Both types skew data and contaminate website analytics, which leads to wrong interpretations and, subsequently, bad decisions. To shed more light on how to remove referral spam and bot traffic from your analytics profiles, we have put together some key recommendations and GA filtering tips.

Key Recommendations

When setting up filters and tackling referral spam, always set up an unfiltered view before you begin: a view with no filters that holds all your traffic data, including bots. This is a must, as there is no recovery from a bad filter and nobody wants to risk losing valuable data to a typo.

The first thing to do is to determine how much of your website’s traffic actually comes from bots. Breaking down your website traffic, and then your bot traffic, into segments is crucial, because every further step depends on knowing how much of the traffic is bots and which types of bots are visiting your site and influencing your data.

So, get to know your bot traffic and its breakdown (good, bad, neutral). There are plenty of online tools available to do this at no cost, but remember that, as with most free tools, you get what you pay for.
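Once sessions have been labeled, the breakdown itself is simple arithmetic. The sketch below is a minimal example that assumes a bot-detection tool has already produced one label per session; the label names and the sample data are hypothetical.

```python
from collections import Counter

# Hypothetical session labels, e.g. produced by a bot-detection tool.
sessions = ["human", "good_bot", "human", "bad_bot", "human",
            "neutral_bot", "bad_bot", "human", "human", "good_bot"]


def traffic_breakdown(labels):
    """Return each label's share of total sessions as a fraction."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


breakdown = traffic_breakdown(sessions)
bot_share = 1 - breakdown.get("human", 0.0)  # everything that is not human

for label, share in sorted(breakdown.items()):
    print(f"{label:12s} {share:.0%}")
print(f"total bot share: {bot_share:.0%}")
```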

As bots can be good, bad or neutral, make sure all your internal teams are coordinated on the topic and understand the impact that bots have on the business. It’s important to coordinate marketing, IT, sales, site operations, etc. efficiently, as some bots may belong to partners or to tools and extensions you use, and are therefore legitimate.

What To Avoid

There is a lot of bad advice around the web on how to address this issue. Very reputable sites often suggest server-side technical changes such as .htaccess edits, but our advice is to avoid those kinds of solutions, as they require a lot of technical knowledge and can easily go wrong.

Also, the Filter Known Bots & Spiders checkbox within Google Analytics provides some degree of bot protection but isn’t effective against ghost and zombie bots.

Lastly, we suggest you avoid using the Referral Exclusion list under the Property to filter spam. On various occasions it has proven to be inaccurate: it often turns the visit into a (none)/Direct visit, doesn’t provide a universal solution, and doesn’t let you check for false positives against your historical data.

Eliminate Bot Data From Marketing Data and Analytics

Google has announced that it is working on a global solution. Until then, there are a few things you can do yourself, though they require a bit of technical knowledge.

First, you need to be able to tag bots as bots so that you can exclude them from data analysis. Next, you need to ensure your data tools can use the bot tag to perform the necessary exclusions. Google Analytics users can create the appropriate filters to keep their data as clean as possible.

This article, How To Filter Google Analytics Referral Spam & Bot Traffic, provides a fairly easy way to set the whole thing up.

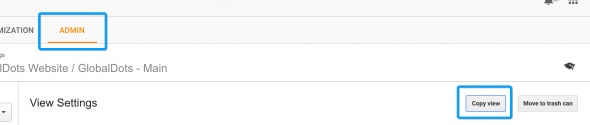

Start by opening the Admin section of your Google Analytics account, pick settings and then Create Copy, and name the new view “www.yourwebsite.com // Bot Exclusion View” or something similar. Use this view to filter out bot traffic. At first it will have no historical data, but data will build up over time.

Ghost Bot Filtering

As we mentioned before, ghost bots generate referral sessions that never happened, because the bot never requested any files from your server. Instead, it sent data directly to your Google Analytics account by firing the analytics code with a random UA code. The mechanism it uses is normally a way to input offline data into GA, but it is easily abused.

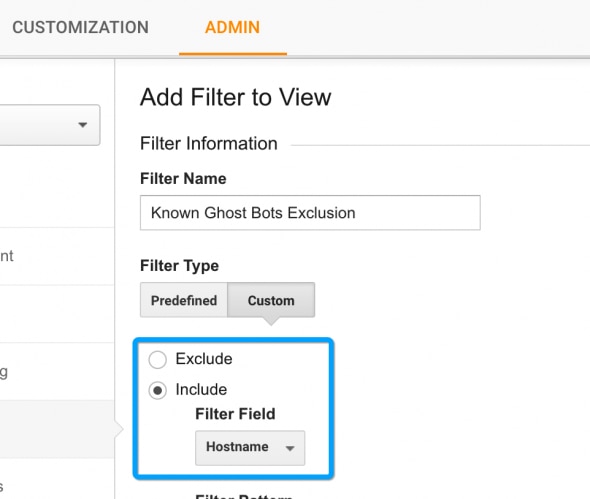

Your server cannot block or filter ghost bots, as they never actually reach your server. You also cannot filter them one by one as they show up, because they change domain name variations frequently. The solution in this case is to filter by Hostname. Here’s how to do it:

- Access the GA historic view reporting interface

- Navigate to Audience > Technology > Network

- Select Hostname as the primary dimension and make sure to specify at least the last year as your date range. (Hostname is “the full domain name of the page requested” and, for most ghost bots, this parameter is hard to fake.)

- Go to historic view hostname report

- Set the date range as far back as possible. You should find legitimate hostnames such as translate.google.com and maybe web.archive.org. For e-commerce sites, the payment processor’s domain name will also be present. Everything else is probably junk, especially “(not set)” and hostnames that you can easily recognize as not serving your content.

- Take note of all the valid hostnames and create a regex that includes only those (e.g. yourwebsite\.com|translate\.google\.com|archive\.org). Keep spaces out of the pattern and escape the dots so they match literally. The new regex will capture all subdomains of the main domain, as well as any time someone loaded the site within Google Translate or archive.org.
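Before pasting the pattern into a filter, it can help to build and test it offline. The sketch below is one way to do that in Python; the hostname list is hypothetical, and it assumes the filter matches the pattern anywhere in the hostname (partial matching), so check that assumption against how your GA filter behaves.

```python
import re

# Hypothetical list of hostnames you confirmed as legitimate in the report.
valid_hostnames = ["yourwebsite.com", "translate.google.com", "archive.org"]

# Escape dots so "." matches a literal dot, and join without spaces.
hostname_pattern = "|".join(re.escape(h) for h in valid_hostnames)
hostname_re = re.compile(hostname_pattern, re.IGNORECASE)


def is_valid_hostname(hostname):
    """Partial match, so subdomains such as www.yourwebsite.com pass."""
    return bool(hostname_re.search(hostname))


print(hostname_pattern)
for candidate in ["www.yourwebsite.com", "web.archive.org", "(not set)", "spam-site.xyz"]:
    print(candidate, is_valid_hostname(candidate))
```

Because the match is partial, a hostname that merely contains one of the valid names would also pass, which is one reason the filter is an obstacle rather than a guarantee.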

Proceed with:

- Access the Admin section

- Pick Filters in your Bot Exclusion view

- Add a new custom filter

- Select Include Only Hostname and add the created regex into the field

- Name and save the filter

This new View will now filter ghost bots that do not set your domain name as the hostname dimension. It is not 100% bot proof but will add a major obstacle for almost all ghost bots.

Zombie Bot Filtering

Zombie bots allow some more options since they do visit and render your website, unlike ghost bots. We do not advise it as good technical knowledge is required, but you can check out server-side solutions in this tutorial. Blocking at server level adds a “cleaning” layer to analytics, and also reduces load on server resources.

Now, without implementing difficult server-side measures, try these steps in order to filter zombie bots by detecting their footprint:

- Access the Network Domain report at Audience > Technology > Network Domain (This report details the ISP visitors are on when visiting the site). NOTE: Human visitors use retail ISP brands such as Comcast, Verizon, Vodafone, maybe a university or business intranet.

- Sort the report by Bounce Rate (there may be MSN, Microsoft, Amazon, Google, Level3, etc. and also some fake Network Domains like “Googlebot.com”)

- Select those that have non-existent user engagement and add them in a new regex (e.g. amazon|google|msn|microsoft|automattic)
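The selection step above can be sketched on exported report data. The rows, the thresholds and the function name below are all hypothetical; pick cut-offs that suit your own traffic, and review the flagged domains by hand before excluding anything.

```python
import re

# Hypothetical rows exported from the Network Domain report:
# (network domain, sessions, bounce rate, average session duration in seconds)
rows = [
    ("comcast.net", 1200, 0.42, 95.0),
    ("amazonaws.com", 300, 1.00, 0.0),
    ("googlebot.com", 150, 1.00, 0.0),
    ("verizon.net", 800, 0.47, 80.0),
    ("microsoft.com", 90, 0.99, 1.0),
]


def suspicious_domains(rows, min_bounce=0.98, max_duration=2.0, min_sessions=50):
    """Return domains whose sessions show practically no engagement."""
    by_bounce = sorted(rows, key=lambda r: r[2], reverse=True)
    return [
        domain
        for domain, sessions, bounce, duration in by_bounce
        if sessions >= min_sessions and bounce >= min_bounce and duration <= max_duration
    ]


flagged = suspicious_domains(rows)
# Escape each domain and join with "|" to get an exclude pattern.
exclude_pattern = "|".join(re.escape(d) for d in flagged)
print(exclude_pattern)
```

The article's own example uses shorter fragments such as amazon or google, which match more broadly; full escaped domains are the more conservative choice.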

The next footprint to apply is in the Browser & OS report:

- Go to Audience > Technology > Browser & OS. Here you’ll find visits from Mozilla Compatible Agent. These are likely bots.

- Repeat the regex procedure

These two footprints usually capture the vast majority of zombie bots.

Before adding them as a filter, try to identify zombie bots that may be hitting your site specifically, as follows:

- Go to Acquisition > All Traffic > Source/Medium

- Look at each medium in turn

- Add a secondary dimension and cycle through the dimensions under Users and Traffic. A dimension value (e.g. Internet Explorer 7) with abnormal engagement metrics might indicate a bot.

- Look for more footprints

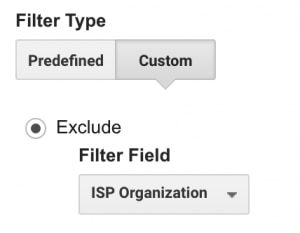

After detecting zombie bot footprints, head back to apply the filters:

- Go to Admin section and Filters in Bot Exclusion view

- Repeat the same steps as for ghost bots, but instead of Hostname, create two filters to exclude the Network Domain (ISP Organisation) regex and the Browser/OS regex respectively

If you detect more zombie bots just create a new filter based on those findings. Be sure to apply the Verify Data feature to check your filters.
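To sanity-check the combined effect of the three filters before relying on them, you can replay them over a handful of sample sessions. The sketch below is only an offline approximation, not GA's own Verify Data feature; the patterns mirror the examples in this article, and the session records and field names are hypothetical.

```python
import re

# Patterns mirror the examples in the text; adapt them to your own findings.
INCLUDE_HOSTNAME = re.compile(r"yourwebsite\.com|translate\.google\.com|archive\.org", re.I)
EXCLUDE_NETWORK = re.compile(r"amazon|google|msn|microsoft|automattic", re.I)
EXCLUDE_BROWSER = re.compile(r"Mozilla Compatible Agent", re.I)


def keep_session(session):
    """Apply the include filter first, then both exclude filters."""
    if not INCLUDE_HOSTNAME.search(session["hostname"]):
        return False  # ghost bot: hostname is not one of ours
    if EXCLUDE_NETWORK.search(session["network_domain"]):
        return False  # zombie bot footprint: network domain
    if EXCLUDE_BROWSER.search(session["browser"]):
        return False  # zombie bot footprint: browser
    return True


# Hypothetical sample sessions.
sessions = [
    {"hostname": "www.yourwebsite.com", "network_domain": "comcast.net", "browser": "Chrome"},
    {"hostname": "(not set)", "network_domain": "(not set)", "browser": "Chrome"},
    {"hostname": "www.yourwebsite.com", "network_domain": "amazonaws.com", "browser": "Chrome"},
    {"hostname": "www.yourwebsite.com", "network_domain": "verizon.net", "browser": "Mozilla Compatible Agent"},
    {"hostname": "translate.google.com", "network_domain": "vodafone.com", "browser": "Firefox"},
]

kept = [s for s in sessions if keep_session(s)]
print(f"kept {len(kept)} of {len(sessions)} sessions")
```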

Advanced Segment Filtering

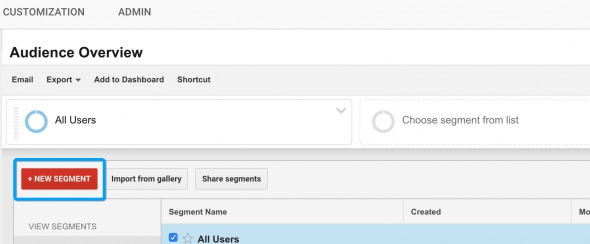

At this point, the new view will filter almost all bot traffic. It may require occasional amending and auditing, but mainly the setup will run on its own. At some point you might need to check historical traffic in your original view which will require an Advanced Segment that will replicate the filters applied earlier.

To set up the Advanced Segment, follow these steps:

- Access the Reporting dashboard of the original view with historical data

- Add a Segment

- Choose New Segment

- Name it (e.g. “Known Bot Filter”)

- Go to Advanced > Conditions

- Add the filters from the new bot view (be sure to note Include/Exclude)

- Save

After doing so the Advanced Segment will be at your disposal to apply on any report. It will even allow you to automatically filter out bots for a selected date range.

To Sum Up

Bots have been around the online landscape for a while, but their impact on marketing and generally on business is changing. Until IT giants launch a global solution, creating filters that remove bot traffic will be your best pick. Also, these articles can give deeper insights on the matter: How to Filter Out Fake Referrals and Other Google Analytics Spam and Geek guide to removing referrer spam in Google Analytics.

Here’s a quick overview of the key checkpoints for filtering analytics referral and bot spam:

- Identify the level of ghost and zombie bots on your site

- Create a new view for filtering known bots

- Add filters for ghost bots (Hostname) and zombie bots (Network Domain & Browser)

- In your historical view, create an advanced segment with the same filters so you can filter historical traffic

- Commit your analytics to regular auditing and always be skeptical of traffic data

Following all these steps can be quite stressful, so if you find them to be beyond your technical knowledge, a good alternative is to contact a solution vendor and trial their bot management products.

It can be tough to pick the best online path forward for your company, but if you want to eliminate analytics spam without risking your unfiltered data, filtering out false positives or making unsustainable server changes, GlobalDots experts are always here to help. Feel free to contact them to boost the performance of your web assets.