Data is the cornerstone of all online marketing and business decisions. Understanding visitors, market trends, behaviour and industry shifts all comes down to data-driven insights. And those insights can only be as good as your data.

To gather data we use a variety of monitoring and analytics tools, and one of the most widely used is Google Analytics (GA). It helps gather intel about traffic, users, channels and behaviour, making it the source of all the main website metrics. But if you’ve used it, you have probably noticed that some data simply doesn’t add up, and you rightly doubt its legitimacy.

The most frequent source of inaccurate data picked up by your GA is bot traffic. This should come as no surprise considering that over half of the Internet’s traffic is made by bots, according to a report by Imperva Incapsula. Bot traffic that gets picked up by analytical tools can end up skewing your data reports, which can lead to wrong assumptions and conclusions, impact your site performance and ultimately even harm your business. In this article we’ll cover the basics of bot traffic, how to detect it and how to eliminate it.

About Bots

Considering that for every human hit there’s a bot hit to a server, it is safe to say that bots are an essential component of the internet infrastructure. On one side we have “good bots”, which organisations use to gather information and perform automated tasks (such as search engine bots that crawl and index your site). On the other side there are “bad bots” deployed by cyber-criminals to steal data or to take part in botnets that launch powerful DDoS attacks (such as the one against Dyn).

According to Imperva Incapsula, 22.9% of all traffic is made by good bots while 28.9% is malicious. Good bots, such as search engine crawlers, respect your robots.txt file instructions and are excluded from GA reports by default. Bad bots, on the other hand, visit your site with all kinds of intentions, such as spamming, content scraping or malware distribution. Advanced bots often do a great job of imitating human behaviour, making it very difficult to separate them from regular human visitors. Vast media coverage of security breaches has further pushed the online community to rethink their security solutions. Many of those solutions offer a high level of bot protection, filtering out most bot traffic.

Because bots are treated mainly as a security issue, they are often an afterthought for marketers. However, even if most of the bot traffic gets eliminated, there are still residues left that your GA easily picks up and treats as legitimate visits. These residues can end up distorting your analytical data. When talking about bad bots that get picked up by GA, there are two main types:

- Ghost bots – The ones that never actually visit your site. These are nothing more than a spam nuisance and show up under referral traffic in GA.

- Zombie bots – Fully render your site. They produce analytics spam by triggering your analytics code as a side effect of their activity.

How To Detect Bot Traffic

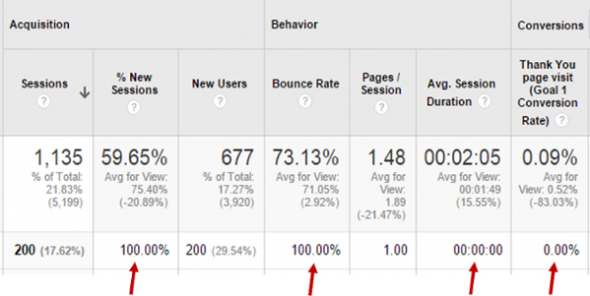

In the past you may have heard that bots won’t show in your GA data because they don’t trigger JavaScript. Unfortunately, that is no longer true. The Filter Known Bots & Spiders checkbox within GA provides some degree of protection, but it isn’t fully effective against ghost and zombie bots. Some early indications of bot traffic in your analytical reports can be:

- Low average session duration

- High bounce rates

- No goal completion

- High values for new visitors
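The four indicators above can be checked mechanically on exported report rows. The following is a minimal sketch, not a GA feature: the `SegmentStats` fields, the `bot_signals` helper and all threshold values are assumptions chosen for illustration and should be tuned to your own traffic.

```python
from dataclasses import dataclass


@dataclass
class SegmentStats:
    """One row of an exported report (hypothetical field names)."""
    name: str
    sessions: int
    avg_session_duration_s: float
    bounce_rate: float      # fraction between 0.0 and 1.0
    goal_completions: int
    new_user_share: float   # fraction between 0.0 and 1.0


def bot_signals(seg, max_duration_s=5.0, min_bounce=0.9, min_new_share=0.9):
    """Return the list of bot indicators that a segment triggers.

    The default thresholds are illustrative assumptions, not GA values.
    """
    signals = []
    if seg.avg_session_duration_s <= max_duration_s:
        signals.append("low average session duration")
    if seg.bounce_rate >= min_bounce:
        signals.append("high bounce rate")
    if seg.goal_completions == 0:
        signals.append("no goal completions")
    if seg.new_user_share >= min_new_share:
        signals.append("high share of new visitors")
    return signals


# Made-up example rows: one bot-like segment, one human-like segment.
suspect = SegmentStats("amazonaws.com", 500, 1.2, 0.99, 0, 0.98)
regular = SegmentStats("verizon.net", 800, 95.0, 0.45, 12, 0.60)
```

A segment that triggers all four signals, like `suspect` here, is a good candidate for a closer look. A single signal on its own is weak evidence, since plenty of human visits are short or bounce.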

If you check your GA dashboard regularly you could encounter a sudden burst in traffic on some occasions. If there were no special occasions, campaigns or social events that could justify the sudden increase in traffic then it is likely due to bot traffic. In that case make sure to check some of the following GA reports for those unexpected spikes in traffic:

- New vs. Returning Users – By comparing new and returning sessions you can easily track bot traffic, as bots get picked up as new users but show low levels of website engagement. (Path: GA – Audience – Behavior – New vs Returning User)

- Browser & OS – After tracking a specific browser that achieved a high number of sessions, you can narrow down this report to find the version responsible for the sudden burst of traffic. Cross-check it with bounce rates and session durations to verify the assumption of bot correlation. (Path: GA – Audience – Technology – Browser & OS)

- Network Domain – The “Network” report provides a list of ISPs from which your traffic is generated, such as Google, Verizon or Amazon. By adding the secondary dimension “network domain” you can narrow down traffic by domain. Usually the most common generator of bot traffic is “amazonaws.com”. Once you detect the domain that generated bot traffic you can exclude that traffic by applying a custom filter. (Path: GA – Audience – Technology – Network)
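Before drilling into these reports, it helps to pin down which days actually spiked. The sketch below flags days whose session count is well above the median of the preceding days. The `find_spikes` name, the 7-day window and the 2x factor are assumptions for illustration; the input is assumed to be a plain list of daily session counts exported from GA.

```python
from statistics import median


def find_spikes(daily_sessions, window=7, factor=2.0):
    """Return indexes of days whose sessions exceed `factor` times
    the median of the previous `window` days."""
    spikes = []
    for i in range(window, len(daily_sessions)):
        baseline = median(daily_sessions[i - window:i])
        if baseline > 0 and daily_sessions[i] > factor * baseline:
            spikes.append(i)
    return spikes


# Made-up daily session counts with one obvious burst on day index 7.
daily = [100, 110, 95, 105, 98, 102, 107, 500, 101]
spike_days = find_spikes(daily)
```

The median is used instead of the mean so that one spike does not inflate the baseline for the days that follow it. Any flagged day still needs the manual check described above, since a campaign or social event can produce the same pattern.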

How to Exclude Bot Traffic

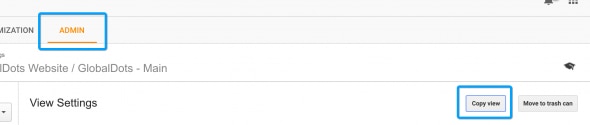

Google has announced a global solution for filtering bots and referral spam in GA, but until it gets released here are a few tricks you can implement yourself. Before you start applying any filters, make sure to set up an unfiltered view – a view with no filters – that will encompass all your website traffic data, including bots. Don’t skip this step: nobody wants to lose data to a typo.

As a first step, you should get to know your bot traffic. As bots can be good, bad or neutral, make sure all your internal teams are coordinated on the topic. Align marketing, IT, sales, site operations and others, as some bots may be related to partners, tools or extensions you use and are therefore legitimate.

Next is a recap of the procedures needed to successfully exclude bot data. For an in-depth step-by-step guide, make sure to check our previous article on the topic of filtering bot traffic.

To exclude bot data, you need to label bots as bots. Next, you need to create appropriate filters in GA and apply them to your data in order to keep it as clean as possible. Start by going to the Admin section in GA, then Settings and then Create Copy. Name it – www.yourwebsite.com// Bot Exclusion View or similar. You will then use this new view to filter out bot traffic. At first it will appear empty, but it will fill up with data over time.

Having detected your bot sources, you can now proceed to setting up filters that will eliminate invalid data from your reports. For ghost bots, you will need to set up a filter by Hostname, so take note of all the valid hostnames. After that, create a regex that matches only those. The regex should also capture all subdomains of the main domain. Now head back to your Bot Exclusion view to add a new custom filter. Select Include Only Hostname and add the created regex into the field.
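As a rough illustration of the hostname regex, the sketch below builds one pattern from a list of valid domains and checks it against sample hostnames. The `build_hostname_pattern` helper is hypothetical, the domain reuses the placeholder name from this article, and the pattern style is an assumption; verify the result in GA itself before relying on it.

```python
import re


def build_hostname_pattern(domains):
    """Build an include pattern that matches each valid domain and
    any of its subdomains, and nothing else."""
    escaped = "|".join(re.escape(d) for d in domains)
    # Optional "anything." prefix covers subdomains such as www. or shop.
    return rf"^(.+\.)?({escaped})$"


pattern = build_hostname_pattern(["yourwebsite.com"])

# Hostnames as they might appear in the Hostname report (made-up values).
candidates = [
    "yourwebsite.com",
    "www.yourwebsite.com",
    "yourwebsite.com.evil.example",
    "(not set)",
]
valid = [h for h in candidates if re.search(pattern, h)]
```

Anchoring the pattern with `^` and `$` matters: without the anchors, a spoofed hostname that merely contains your domain, such as the third candidate, would pass the include filter.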

Now, with zombie bots, it’s a bit more complicated. You’ll have to filter zombie bots by detecting their footprints first. Use the reports where you previously detected bot traffic and add those suspicious sources to a new regex. Proceed to detect other bot footprints and repeat the regex procedure. After detecting zombie bot footprints, head back to Admin section – Filters in Bot Exclusion view to apply the filters. Follow the same steps as for ghost bots, but instead of an Include filter on Hostname, create an Exclude filter for each regex. You can see a step-by-step guide for setting up filters here.
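The regex procedure for zombie bots can be sketched as follows. Suspicious sources are escaped and joined with `|`, and a new pattern is started whenever the current one would grow past a length limit. The `build_exclude_patterns` helper is hypothetical, the 255-character default is an assumption about the filter field that you should confirm in your own GA account, and `badbot.example` is a made-up source.

```python
import re


def build_exclude_patterns(sources, max_len=255):
    """Join escaped sources into as few alternation patterns as fit
    within `max_len` characters each (assumed field limit)."""
    patterns, current = [], ""
    for source in sources:
        escaped = re.escape(source)
        candidate = f"{current}|{escaped}" if current else escaped
        if current and len(candidate) > max_len:
            # Current pattern is full: store it and start a new one.
            patterns.append(current)
            current = escaped
        else:
            # A single over-long source is kept as-is rather than dropped.
            current = candidate
    if current:
        patterns.append(current)
    return patterns


suspicious = ["amazonaws.com", "badbot.example"]
patterns = build_exclude_patterns(suspicious)


def is_excluded(value):
    """True if the value matches any exclude pattern."""
    return any(re.search(p, value) for p in patterns)
```

Each resulting pattern would go into its own Exclude filter. Unlike the hostname pattern, these are deliberately unanchored so that `amazonaws.com` also matches longer network domains that end in it.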

Advanced Filtering

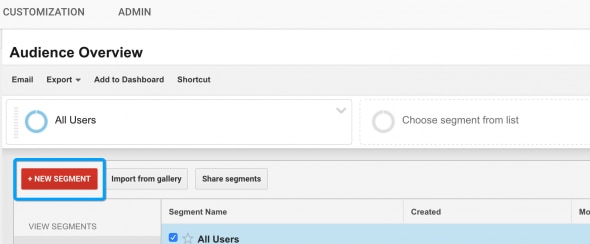

Now the new view will filter almost all bot traffic. At some point, however, you will probably need to verify historical traffic in your original view. This will require you to set up an Advanced Segment that replicates the filters applied earlier. You’ll need to Add a Segment in the Reporting dashboard of your original view. Name it something like “Bot Filter” and add all the new filters in the Advanced – Conditions section (keep in mind the Include/Exclude setting).
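The Include/Exclude logic of such a segment can be checked offline against exported historical rows. This is only a sketch of the logic, not of the GA segment builder: the row keys (`hostname`, `network_domain`), the `passes_bot_filter` helper and both patterns are assumptions for illustration.

```python
import re

# Assumed patterns: one include condition and one exclude condition.
INCLUDE_HOSTNAME = r"^(.+\.)?yourwebsite\.com$"
EXCLUDE_NETWORK = r"amazonaws\.com"


def passes_bot_filter(row):
    """A row is kept only if it matches the include condition
    AND does not match the exclude condition."""
    if not re.search(INCLUDE_HOSTNAME, row["hostname"]):
        return False
    return not re.search(EXCLUDE_NETWORK, row["network_domain"])


# Made-up historical rows exported from the unfiltered view.
history = [
    {"hostname": "www.yourwebsite.com", "network_domain": "verizon.net"},
    {"hostname": "www.yourwebsite.com", "network_domain": "amazonaws.com"},
    {"hostname": "(not set)", "network_domain": "verizon.net"},
]
clean = [row for row in history if passes_bot_filter(row)]
```

Comparing `len(history)` with `len(clean)` gives a quick estimate of how much historical traffic the segment would remove, which helps when looking for false positives before trusting the filtered numbers.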

By doing so you will have an Advanced Segment filter at your disposal. It will contain all your bot filters, and you will be able to apply it to any report and any selected date range. There are also plenty of other advanced techniques that can be implemented, but if you don’t have adequate technical skills, we strongly suggest avoiding these:

- Server-side technical changes such as .htaccess edits – these require technical knowledge and can easily go wrong.

- Referral Exclusion under Property – it has proven to be inaccurate, it often turns the visit into a (none)/Direct visit, it doesn’t provide a universal solution and it doesn’t let you check for false positives against your historical data.

Conclusion

As noted, bots make up more than half of all Internet traffic and are a force to be reckoned with. As technology advances, bot sophistication is rising and their impact on businesses is increasing. Until a global solution gets put in place, creating GA filters will be your safest pick. It is worth mentioning that eliminating bot traffic from your GA reports simply hides it from your data: the bots still hit your servers and may consume resources as well as impact the performance of your web assets. Stopping bot traffic altogether requires considerable expertise and adequate tools. Also, all the steps described above can be stressful and time-consuming, so at a certain point you might want to consider contacting experts on the matter. If you seek excellence in eliminating analytics spam without risking your unfiltered data, filtering false positives or creating unsustainable server changes, our experts are always here to help. Feel free to contact our experts at GlobalDots, as they can help you pick the security and performance solutions that best suit your needs.