Speeding up your page load time is crucial for overall performance, conversion rate, brand perception and more. You can do it either by improving your browser-side technologies or by using server-side acceleration technologies.

First, you need a tool to find out exactly which component is slowing you down, and to what extent. One option is Page Speed Online, a tool developed by Google (https://developers.google.com/speed/pagespeed/insights).

Alongside the tool come analysis and advice on performance best practices, collected in Google’s Web Performance Best Practices (https://developers.google.com/speed/docs/best-practices/rules_intro).

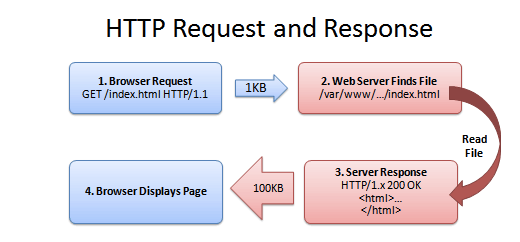

Page Speed evaluates how well your pages eliminate steps involved in page load time, such as transmitting HTTP requests, downloading resources, parsing and executing scripts, resolving DNS names and so on. This is useful both for shortening the time each step takes to complete and for comparing your web page’s performance against a set of rules. The rules are general front-end best practices you can apply at any stage of web development, and they come with documentation. Google’s Web Performance Best Practices also provide advice on how to tune your site for the characteristics of mobile networks and mobile devices (which are becoming increasingly popular, but take more time to load web pages than desktop browsers).

The rules fall into six categories, the first of which is optimizing caching.

Optimizing Caching

The browser can store a copy of images, stylesheets, JavaScript or the entire page, so that when you need it again later, the browser doesn’t have to download it again. This is caching. Round-trip time is significantly reduced by eliminating numerous HTTP requests for the required resources, and the total payload size of the responses drops substantially. Better caching means fewer downloads and, as an immediate result, a faster page load time.

Caching can also be made to work for files that tend to change, and for cases where the browser isn’t sure whether a cached file can still be used: for example, by adding a timestamp to the file (Last-Modified), an identifier such as an ETag, an expiry rule, and more.
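To make the identifier idea concrete, here is a minimal Python sketch of ETag validation. The function names `make_etag` and `respond` are hypothetical, and deriving the ETag from a hash of the body is just one common choice, not something the Google guidelines prescribe.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Build an ETag from a hash of the body (an assumed scheme for this sketch)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a request for `body`.

    `if_none_match` is the value of the browser's If-None-Match header, if any.
    When it equals the current ETag, the cached copy is still valid, so we
    answer 304 Not Modified with an empty payload instead of resending the file.
    """
    etag = make_etag(body)
    headers = {"ETag": etag}
    if if_none_match is not None and if_none_match.strip() == etag:
        return 304, headers, b""
    return 200, headers, body
```

On the first request the browser has no ETag and receives the full file; on later requests it sends the stored ETag back and downloads the body again only if the file has changed.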

Google best practices advise on enabling caching by:

- leveraging browser caching: setting an expiry date or a maximum age in the HTTP headers for static resources instructs the browser to load previously downloaded resources from local disk rather than over the network

- leveraging proxy caching: enabling public caching in the HTTP headers for static resources allows the browser to download resources from a nearby proxy server rather than from a remote origin server

Browser Caching and Proxy Caching

The browser cache works according to fairly simple rules: it checks whether the representations are fresh, usually once per session (that is, once in the current invocation of the browser). The cache comes into play, for example, when the user presses the “back” button. Proxy caches work on the same principle, but on a much larger scale: they aren’t part of the client or the origin server, but sit out on the network, and requests have to be routed to them somehow. Proxy caching can significantly reduce network latency: once a static resource has been requested by a single user through a proxy, it becomes available to all users whose requests go through that same proxy.

Recommendations, among others, for improving browser caching are:

Setting caching headers for all static resources

Google’s best practices recommend setting an expiry of at least one month, and preferably up to one year, in the future. They also recommend setting the Last-Modified date to the last time the resource was changed. If the Last-Modified date is sufficiently far in the past, chances are the browser won’t refetch it.
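As a rough illustration, the headers for a static resource could be built like this in Python. The helper name `static_cache_headers` and its parameters are assumptions made for this sketch; only the header names themselves come from HTTP.

```python
import time
from email.utils import formatdate
from typing import Dict, Optional

ONE_YEAR_SECONDS = 365 * 24 * 60 * 60  # upper end of the recommended expiry range


def static_cache_headers(last_modified_ts: float,
                         now: Optional[float] = None,
                         max_age: int = ONE_YEAR_SECONDS) -> Dict[str, str]:
    """Return caching headers for a static resource (hypothetical helper).

    last_modified_ts: Unix timestamp of the last change to the resource.
    now: current Unix timestamp; defaults to the system clock.
    max_age: lifetime in seconds during which the browser may reuse its copy.
    """
    if now is None:
        now = time.time()
    return {
        # "public" also allows shared proxy caches to store the response.
        "Cache-Control": f"public, max-age={max_age}",
        # HTTP dates are always expressed in GMT.
        "Expires": formatdate(now + max_age, usegmt=True),
        "Last-Modified": formatdate(last_modified_ts, usegmt=True),
    }
```

The resulting dictionary can be attached to responses for images, stylesheets and scripts in whatever server or framework you use.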

Using fingerprinting

Fingerprinting is embedding a “fingerprint” of the resource in its URL (i.e. the file path). When the resource changes, so does its fingerprint, and in turn, so does its URL. As soon as the URL changes, the browser is forced to re-fetch the resource.
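A small Python sketch of the idea follows. The function name `fingerprinted_path`, the use of SHA-256 and the eight-character digest length are illustrative assumptions; any scheme that changes the URL whenever the content changes would do.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprinted_path(path: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a short content hash before the file extension.

    For a path such as /static/site.css the result has the form
    /static/site.<digest>.css, where <digest> depends only on `content`.
    """
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))
```

Because the URL changes only when the file content changes, such resources can safely be given a far-future expiry date.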

Other recommendations include setting the Vary header correctly for Internet Explorer, avoiding Firefox cache collisions, and using the Cache-Control: public header for some versions of Firefox. Examples of these and detailed explanations can be found on the official webpage (https://developers.google.com/speed/docs/best-practices/caching).

Along the same lines, rules for improving proxy caching include:

- Don’t include a query string in the URL for static resources

- Don’t enable proxy caching for resources that set cookies

- Be aware of issues with proxy caching of JS and CSS files

Details on these can be found on the same page. When properly enabled, proxy caching can amount to free web site hosting, since responses served from a proxy cache don’t draw on your servers’ bandwidth at all.
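The first two proxy caching rules are easy to check automatically. The Python sketch below uses a hypothetical helper, `proxy_cache_problems`, that flags a static resource whose URL carries a query string or whose response sets a cookie. The third rule, about JS and CSS files, depends on proxy behaviour and is not covered here.

```python
from typing import Dict, List
from urllib.parse import urlsplit


def proxy_cache_problems(url: str, response_headers: Dict[str, str]) -> List[str]:
    """List reasons why a static resource may not be well suited to proxy caching."""
    problems = []
    # Rule 1: static resource URLs should not include a query string.
    if urlsplit(url).query:
        problems.append("URL contains a query string")
    # Rule 2: responses that set cookies should not be publicly cached.
    header_names = {name.lower() for name in response_headers}
    if "set-cookie" in header_names:
        problems.append("response sets a cookie")
    return problems
```

An empty list means neither of the two checked rules is violated; it does not guarantee that every proxy will cache the resource.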

Overall, improved caching reduces latency and network traffic by satisfying requests from the cache; in other words, it uses storage space to save you time. Bandwidth requirements will be lower and more manageable, and the web page more responsive. The rules web caches follow are set in the protocols, and how caches treat your site can be finely tuned, as in the examples above. And the results are worth the trouble.

Read more:

- https://developers.google.com/speed/docs/best-practices/caching

- http://betterexplained.com/articles/how-to-optimize-your-site-with-http-caching/

About GlobalDots

With over 10 years of experience, GlobalDots has unparalleled knowledge of today’s leading web technologies. Our team knows exactly what a business needs to do to succeed in providing the best online presence for its customers. We can analyse your needs and challenges to provide you with a bespoke recommendation about which services you can benefit from.

GlobalDots can help you with the following technologies: Content Delivery Networks, DDoS Protection, Multi CDN, Cloud performance optimization and infrastructure monitoring.