27th January, 2017

DNS security has been widely discussed over the last few months. This is largely due to the massive DNS-based DDoS attack that shook the US in October 2016, but also because of Oracle’s recent acquisition of Dyn, one of the largest DNS service providers worldwide. Taking into consideration how the attack was executed, as well as its magnitude, many organisations started reviewing their options and rethinking their overall DNS strategies. Multi-DNS quickly emerged as one of the leading ways to mitigate such threats.

In this article we’ll break down the origins of DNS services with a brief historical overview, explain how they operate, and look at the reasons why multi-DNS is becoming increasingly popular.

How Does DNS Work?

Domain Name System (DNS) servers are often called “the phonebooks of the Internet”. They resolve human-readable hostnames into machine-readable IP addresses, and they also provide other useful information about domain names, such as which servers handle their mail. When you know someone’s name but not their phone number, you look them up in a phonebook (well, not as much as in the past). DNS uses the same logic: when you request a web location you type its name, and DNS servers find its IP address.

For example, if you request an address such as globaldots.com, DNS will translate it to something like 198.51.100.4. These Internet phonebooks greatly influence the accessibility of web locations, which is exactly why DNS is crucial for any organization that relies on the Internet to connect to customers, partners, suppliers and employees.
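The lookup described above can be sketched in a few lines of Python. This is only an illustration: the function name `resolve` is made up for this example, and it relies on the operating system's resolver (via the standard `socket.getaddrinfo` call) rather than talking to DNS servers directly.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the IPv4 addresses the system resolver reports for hostname.

    `resolve` is a hypothetical helper name used only for this sketch.
    """
    # getaddrinfo hands the name to the operating system's resolver, which
    # queries DNS when needed (literal IP addresses are returned as-is).
    infos = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (address, port) pair.
    addresses: list[str] = []
    for *_, sockaddr in infos:
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses
```

On a machine with network access, `resolve("globaldots.com")` would return whatever addresses DNS currently holds for that name, so the result changes over time and depends on where you ask from.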

The Evolution of DNS

Twenty years ago, the situation was a bit different. Back then it was common for everyone to run their own “authoritative servers” that would serve out their DNS records. You had to publish “name server” records for each domain name, listing which DNS servers were “authoritative” for your domain. These were the servers that could answer with the DNS records people needed to reach your web assets. You needed at least one authoritative server to distribute your DNS records.

The main issue in those early days was that if that server had problems or went offline for some reason, people would be unable to access your web location because its DNS records could not be retrieved. That is why the best practice at the time was to run a “secondary” DNS server that held a copy of your domain’s DNS records, ideally in a different geography and on another network.

All the way back in 1997, the Internet Engineering Task Force (IETF) published RFC 2182, where they suggested that organisations

“find an organisation of similar size, and agree to swap secondary zones – each organization would agree to offer a server to act as a secondary server for the other”.

It was common practice to have two, three, four or more authoritative servers. One was the master server, where all the changes were made, while the others acted as “secondary” servers that copied DNS records from the master. Although this provided geographic and network resilience and helped ensure the availability of DNS records, the practice grew unsustainable over the years. It simply took too much time, knowledge and resources to develop a proprietary DNS infrastructure. Companies needed someone who would take care of these processes for them.
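The master/secondary arrangement can be illustrated with a toy in-memory model. This is a simplified sketch, not how any particular DNS server is implemented: the class names are invented for the example, and it assumes the usual convention that a secondary copies the zone only when the master's zone serial number is higher than its own.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Zone:
    """A toy zone: a serial number plus hostname -> IP records."""
    serial: int = 1
    records: dict[str, str] = field(default_factory=dict)


class MasterServer:
    """Hypothetical master: the only place where records are changed."""

    def __init__(self) -> None:
        self.zone = Zone()

    def set_record(self, name: str, ip: str) -> None:
        # Every change bumps the serial so secondaries can detect it.
        self.zone.records[name] = ip
        self.zone.serial += 1


class SecondaryServer:
    """Hypothetical secondary: serves a copy of the master's zone."""

    def __init__(self) -> None:
        self.zone = Zone(serial=0)

    def refresh(self, master: MasterServer) -> bool:
        """Copy the zone if the master's serial is newer; return True if copied."""
        if master.zone.serial <= self.zone.serial:
            return False
        self.zone = Zone(master.zone.serial, dict(master.zone.records))
        return True

    def lookup(self, name: str) -> Optional[str]:
        return self.zone.records.get(name)
```

After a change on the master, a secondary keeps answering from its old copy until the next `refresh`. Even in this toy version the secondary has to be kept running and in step with the master, and each extra server adds to that operational work.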

DNS Hosting Providers

It soon became too expensive and complicated for site owners to build and run their own DNS infrastructure. To address these pain points, specialised companies emerged: the DNS hosting providers. You just had to sign up and delegate DNS operations, and they took care of everything else. The benefits they provided were (and still are) huge. Instead of building and running their own DNS network with a couple of servers, organisations gained access to tens or even thousands of DNS servers, with none of the complicated processes required.

With technologies such as anycast in place, DNS providers took care of data center procedures and geographic and network diversity while providing capabilities on a global scale, something that very few companies could achieve by themselves. Besides best practices, they offer much more:

- Performance – Network latency experienced by geographically dispersed users is reduced

- Security – Vulnerability to spoofing and distributed denial of service (DDoS) attacks is substantially decreased

- Reliability – Internet domain queries are consistently and correctly resolved

- Availability – The web location is reachable for users at any given time

- Scalability – As an organization’s business grows, its increase in traffic is handled with ease

For a long time, everything worked smoothly: DNS providers offered great services, moved to the cloud and kept expanding their networks. With all the necessary DNS diversity, performance and protection in place, a single DNS provider was more than enough for each organisation. Until recently.

Multi-DNS: Why Multiple DNS Providers?

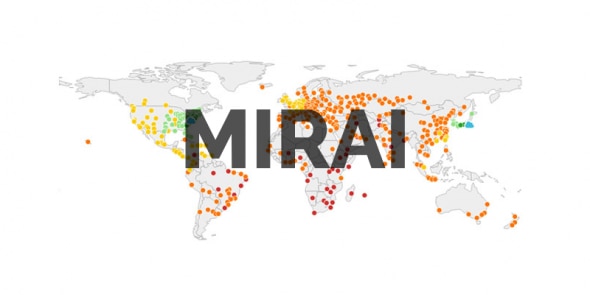

When Dyn, one of the biggest DNS hosting providers, came under one of the most severe DDoS attacks recorded at the time, it brought down Netflix, GitHub, Reddit, Twitter, Airbnb, Amazon and many others. The fall of online giants attracted the media spotlight. All of these services relied on Dyn’s DNS infrastructure, so when Dyn went down, their domain names could no longer be resolved, even though the servers behind them were still running. For hours, millions of people were unable to reach those services.

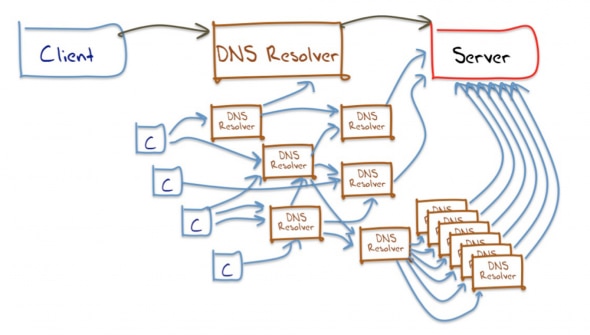

Even with the best technology, procedures and infrastructure in place, the huge amount of traffic generated by the Mirai botnet simply overwhelmed Dyn’s capabilities. For every site that relied on Dyn alone, it was a single point of failure.

At first glance, a simple remedy is to host your DNS records with multiple DNS providers, so that a failover infrastructure is in place in case of a similar outage. So now you may be asking yourself: why isn’t everyone already using multiple DNS providers? Unfortunately, it’s not as simple as it looks.

Each of these providers already has a globally established DNS architecture that tops anything a non-specialised company could have built in the past. An infrastructure of that scale, with dozens of POPs (points of presence) and anycast, coped with the known threats and was more than sufficient up until the Mirai IoT-based DDoS attack, which took the cybersecurity and performance community by storm.

Now, even if it sounds logical in theory, dealing with multiple DNS providers is costly and complicated in most cases. Content Delivery Networks and Global Load Balancers require adjustments, providers differ in the DNS features they support, and the setup brings additional IT complexity and substantial costs. As a result, opting for multiple DNS providers is a hard decision for most sites.
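The failover idea behind multi-DNS can be shown with a small simulation. This is a sketch under simplifying assumptions: each provider is modelled as a plain Python function, the names `make_server`, `resolve_with_failover` and `ProviderDown` are invented for the example, and real resolvers choose among name servers with more elaborate retry and timeout logic.

```python
from typing import Callable, Optional

# In this sketch a "name server" is just a function: hostname -> IP address.
NameServer = Callable[[str], str]


class ProviderDown(Exception):
    """Raised by a simulated name server that cannot answer."""


def make_server(records: dict[str, str], up: bool = True) -> NameServer:
    """Build a simulated authoritative server for one provider."""
    def answer(hostname: str) -> str:
        if not up:
            raise ProviderDown(hostname)
        return records[hostname]
    return answer


def resolve_with_failover(
    hostname: str, servers: list[NameServer]
) -> Optional[str]:
    """Try each authoritative server in turn; return the first answer, or None."""
    for server in servers:
        try:
            return server(hostname)
        except ProviderDown:
            continue  # this provider is unreachable, try the next one
    return None
```

With a single provider that is down, the lookup returns `None`. With a second provider serving the same records, the lookup still succeeds. The example also hints at the cost: both providers must always hold identical records, which is part of the extra complexity described above.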

To Sum Up

Efficiency and high performance over vast geographies are not possible without the appropriate DNS infrastructure. What was unthinkable until recently is now a reality: DNS services are vulnerable and require a new approach.

New and evolved strains of malware are forcing organisations to rethink their DNS strategy and related security options. A multi-DNS approach addresses the availability and security issues but comes at a hefty price and adds another layer of IT complexity. In the long run, the best option is education combined with a security standard for all connected devices, which would reduce the risk of botnets. For now, multi-DNS is by far the safest solution, and if you’re considering it, make sure to consult a specialist.

For that matter, feel free to talk to our experts here at GlobalDots. We can help you with DNS, multi-DNS and everything else performance and security-related.