Businesses across the globe are increasingly relying on big data to guide their decision-making and support everyday operations.

On the other hand, a lack of data visibility and accuracy ranks among the main concerns of enterprises using the cloud. This makes data management imperative for leading enterprises.

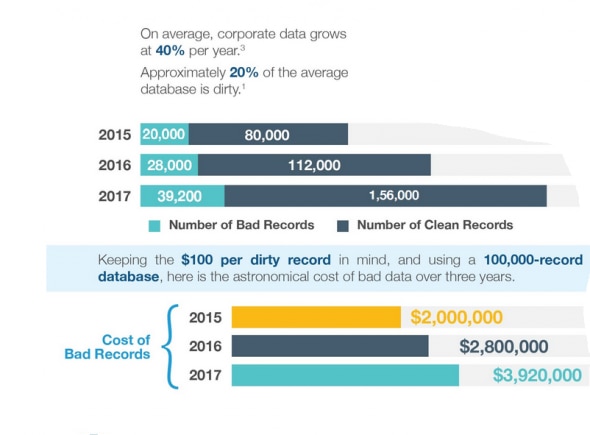

The figure of data inaccuracies costing businesses $15 million per year was reported by Gartner in its 2017 Data Quality Market Survey. The author of the report noted that, while poor data quality was hitting some businesses where it hurts, businesses that had taken steps to make sure their cloud data is accurate benefitted from being able to trade data as a valuable asset to generate revenues.

In this article we discuss how enterprises can achieve data accuracy and visibility, to make informed business decisions and thrive in the cloud.

Data visibility

A recent survey from Dimensional Research, which is based on responses from 338 IT professionals, found that fewer than 20% of IT professionals believe they have complete, timely access to data packets in public clouds. In private clouds, the situation is somewhat better, with 55% reporting adequate visibility. Unsurprisingly, on-premises data centers had the most positive response when it came to data visibility.

The survey authors said the results show that companies have low visibility into their public cloud environments, and that the tools and data supplied by cloud providers are insufficient. They identified some of the challenges this lack of visibility can cause: an inability to track or diagnose application performance issues, difficulty monitoring and delivering against service-level agreements, and delays in detecting and resolving security vulnerabilities and exploits.

Some of the findings regarding cloud visibility from the survey:

- 87% of respondents expressed fears that a lack of cloud visibility is obscuring security threats to their organization.

- 95% of respondents said visibility problems had led them to experience an application or network performance issue.

- 38% cited insufficient visibility as a key factor in application outages and 31% in network outages.

As organizations pursue digital transformation and continue to move to cloud infrastructure, they can’t afford to lose visibility. Visibility underpins the business benefits of cloud traffic monitoring that the survey respondents cited, such as attaining superior application performance, meeting SLAs, and shrinking the time it takes to detect and remedy security-related issues.

Data accuracy (quality)

Before you begin to get a handle on the data itself, it’s important to understand what data quality refers to. According to Gartner, data quality is assessed along several dimensions, including:

- Existence (does the organization have the data to begin with?)

- Validity (are the values acceptable?)

- Consistency (when the same piece of data is stored in different locations, do they have the same values?)

- Integrity (how accurate the relationships between data elements and data sets are)

- Accuracy (whether the data accurately describes the properties of the object it is meant to model)

- Relevance (whether or not the data is appropriate to support the objective)
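
A few of these dimensions lend themselves to simple automated checks. The sketch below is only an illustration of existence, validity, and consistency checks; the field names (`customer_id`, `country`), the allowed values, and both function names are assumptions made for the example, not part of any Gartner definition or specific product.

```python
# Hypothetical rule set: field names and allowed values are illustrative only.
REQUIRED_FIELDS = ("customer_id", "country")
VALID_COUNTRIES = {"US", "DE", "JP"}


def check_record(record: dict) -> list:
    """Return a list of data quality problems found in one record."""
    problems = []
    for field in REQUIRED_FIELDS:
        # Existence: is the value there at all?
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    country = record.get("country")
    # Validity: is a present value within the accepted set?
    if country not in (None, "") and country not in VALID_COUNTRIES:
        problems.append(f"invalid country {country!r}")
    return problems


def find_inconsistencies(store_a: list, store_b: list, key: str = "customer_id") -> list:
    """Consistency: return keys whose records differ between two stores."""
    by_key = {record[key]: record for record in store_b}
    return [
        record[key]
        for record in store_a
        if record[key] in by_key and by_key[record[key]] != record
    ]
```

Integrity, accuracy, and relevance usually need business context (reference data, or a comparison against the real-world object), so they are harder to reduce to a rule like this.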

Data availability and security are critically important, but the accuracy of the data, as well as how easily it can be assessed and consumed, plays as much of a role, if not more, in maintaining a data-driven enterprise. Data that isn’t accurate provides little value to businesses. At the same time, ensuring accuracy can’t come at the expense of operations, even as operating environments and architectures continue to evolve and become more complex.

Data management challenges inevitably arise in terms of:

- Storing and utilizing accumulating volumes of data without crushing systems

- Keeping databases running optimally to ensure applications perform productively and remain available

- Complying with stricter regulatory mandates, forcing modern security practices and access control measures

Some data management experts argue that 100% data quality in the cloud is unattainable, especially when data has been migrated from an on-premises database in different or incompatible formats. Data can also degrade over time due to human error, a failure to keep it up to date, and software bugs.

Still, even if you can’t achieve 100% data quality, you should focus on achieving as much as you can.

How to achieve cloud data accuracy and visibility

With data sets dispersed across many different locations (both on-premises and in the cloud), the key to data quality is total visibility; the two are intertwined. With total visibility across your IT infrastructure, it’s easier to identify data quality problems.

Assuming the data collection process is effective, the first steps are to implement access controls, audit trails, and logs to ensure the integrity of data. Naturally, these measures require monitoring to check that data isn’t being accessed, amended, or deleted without authorization, but there are automation solutions that can do this for you and alert you to events that could result in data being compromised.
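
To make the monitoring idea concrete, here is a minimal sketch of scanning an audit trail for actions the actor was not permitted to take. The `ALLOWED` permission map, the event fields (`actor`, `action`, `object`), and the function name are all hypothetical; a real deployment would read these from the cloud provider’s audit logs and identity policies.

```python
# Hypothetical permissions: which actions each principal may perform.
ALLOWED = {
    "etl_service": {"read", "write"},
    "analyst": {"read"},
}


def unauthorized_events(audit_log: list) -> list:
    """Return audit events whose actor was not permitted to perform the action.

    Actors that do not appear in ALLOWED are treated as having no permissions.
    """
    return [
        event
        for event in audit_log
        if event["action"] not in ALLOWED.get(event["actor"], set())
    ]


if __name__ == "__main__":
    log = [
        {"actor": "analyst", "action": "read", "object": "orders"},
        {"actor": "analyst", "action": "delete", "object": "orders"},
    ]
    for event in unauthorized_events(log):
        print("ALERT:", event)
```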

Where data lacks completeness, consistency, uniqueness, and validity, automation solutions with cloud management capabilities can collect data sets from different data stores (on-premises, single cloud, and multi-cloud) and consolidate the data in one place. They can also check for non-compliant data or data that has been excluded from a data set because of an incompatible format.

Another important thing to consider is data synchronization. Most sites already have data synchronization policies and update procedures for their mission-critical transactional data, but they haven’t necessarily addressed big data.
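
As a rough illustration of consolidation, the sketch below merges records from several stores by ID and sets aside any record whose format cannot be parsed rather than silently dropping it. The store names, the `id` and `created` fields, and the assumption that dates should be ISO 8601 are all invented for the example.

```python
from datetime import date


def consolidate(sources: dict) -> tuple:
    """Merge records from several stores and set aside ones that fail parsing.

    `sources` maps a store name to a list of raw records. Each record is
    expected to carry an `id` and an ISO 8601 `created` date (an assumption).
    Returns (merged, rejected).
    """
    merged, rejected = {}, []
    for store, records in sources.items():
        for raw in records:
            try:
                created = date.fromisoformat(raw["created"])
                record_id = raw["id"]
            except (KeyError, TypeError, ValueError):
                # Incompatible format or missing field: keep it for review.
                rejected.append((store, raw))
                continue
            # Uniqueness: one consolidated entry per ID, noting every store
            # that holds a copy.
            entry = merged.setdefault(
                record_id, {"id": record_id, "created": created, "stores": []}
            )
            entry["stores"].append(store)
    return merged, rejected
```

Keeping the rejected records alongside the store they came from makes it possible to report how much data each source is losing to format problems.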

Big data brings an immense number of data sources and extreme velocity of data delivery. Nevertheless, timestamps on data, along with information on the time zones the data is coming in from, need to be synced in order to know where the freshest data is. There are also the realities of the data update process to face. Not all data can be updated in real time, so decisions have to be made on when the data is synced with master data, and whether batch synchronizations occur nightly or in scheduled batch “burst” modes throughout the day. These processes should be documented in IT operations guides, and they should be updated every time you add a new big data information source to your processing.
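
One common way to compare timestamps from different time zones is to normalize them to UTC first. The sketch below assumes each record carries an ISO 8601 `updated_at` string with an explicit UTC offset; that field name, the sample sources, and the helper names are hypothetical.

```python
from datetime import datetime, timezone


def to_utc(timestamp: str) -> datetime:
    """Parse an ISO 8601 timestamp with a UTC offset and convert it to UTC."""
    parsed = datetime.fromisoformat(timestamp)
    if parsed.tzinfo is None:
        # Without an offset there is no reliable way to order the record.
        raise ValueError(f"timestamp has no timezone information: {timestamp}")
    return parsed.astimezone(timezone.utc)


def freshest(records: list) -> dict:
    """Return the record with the most recent `updated_at`, compared in UTC."""
    return max(records, key=lambda record: to_utc(record["updated_at"]))


if __name__ == "__main__":
    records = [
        {"source": "berlin", "updated_at": "2024-03-01T10:00:00+01:00"},
        {"source": "new_york", "updated_at": "2024-03-01T05:30:00-05:00"},
    ]
    # Berlin is 09:00 UTC and New York is 10:30 UTC, so New York is fresher
    # even though its local clock time looks earlier.
    print(freshest(records)["source"])
```

Rejecting timestamps that lack an offset is a deliberate choice here: guessing the zone would quietly produce a wrong ordering.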

Conclusion

Many businesses fail to tackle the problem of poor data quality and limited data visibility because it’s considered too complex, costly, and time-consuming. However, with total visibility of your IT infrastructure and automation, you should be able to make sure your cloud data is accurate and can be relied upon to help your business thrive in the cloud.

If you have any questions about how we can help you optimize your cloud costs, performance, and security, contact us today.