Web performance optimization covers everything that makes your website load faster. The ideal load time is about 2 seconds, yet many top brands keep losing customers, or at least customer interest, because of poor performance, frequent downtime, and a general lack of familiarity with best practices.

However, many experts in the field have turned web performance optimization into a science, or even an art, by building tools, analyzing performance data, and sharing valuable insights.

Impatient Customer

There is a simple rule of web performance: a faster website. But ‘fast’ isn’t what ‘fast’ used to be. A 250-millisecond delay can send your user to a competitor. One in four customers expects websites to load in under 4 seconds. Most will abandon your website, never to return, after 6-10 seconds. PhoCusWright and Akamai (2010) cite a three-second rule: 57% of online shoppers will wait three seconds or less before abandoning a site.

Internet users don’t wait, and that impatience can cost you substantial revenue, page ranking, conversions, page views, and downloads.

The Web Page Growth

The average web page has doubled in size since 2010. The need to standardize performance optimization rules did not even arise until the late ’90s. According to Web Performance Today:

- In November 2010, the average top 1,000 site had a total payload of 626 KB. By May 2013, this number almost doubled to 1246 KB.

- Much, though not all, of this growth is due to the proliferation of images. In 2010, images accounted for 372 KB of the average page. By 2013, that number grew to 654 KB.
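The figures above can be checked with a little arithmetic. The sketch below uses only the four numbers quoted from Web Performance Today; the function and variable names are illustrative.

```python
# Figures quoted above, in KB. Names are illustrative only.
TOTAL_2010, TOTAL_2013 = 626, 1246
IMAGES_2010, IMAGES_2013 = 372, 654


def growth_summary(total_before, total_after, images_before, images_after):
    """Compare total page growth with the part of it that came from images."""
    total_growth = total_after - total_before
    image_growth = images_after - images_before
    return {
        "growth_factor": total_after / total_before,
        "image_share_of_growth": image_growth / total_growth,
        "image_share_2010": images_before / total_before,
        "image_share_2013": images_after / total_after,
    }


summary = growth_summary(TOTAL_2010, TOTAL_2013, IMAGES_2010, IMAGES_2013)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

On these numbers, images contribute roughly 45% of the added weight, which fits "much, though not all". Their share of the whole page actually falls from about 59% to about 52%, so other content grew even faster.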

Add to this the growing use of mobile devices, the proliferation of video, and attempts at responsive web design, and suddenly you have a whole list of routines to re-check in pursuit of that simple goal: a faster website.

Essential Rules

Steve Souders is considered a founder of web performance optimization, and he coined the term in 2004. He is the Head Performance Engineer at Google, where he works on web performance and open source initiatives. His book, High Performance Web Sites, was #1 in Amazon’s Computer and Internet bestsellers.

The key rules explained in the book are regarded as the original performance rules:

- Rule 1 – Make Fewer HTTP Requests

- Rule 2 – Use a Content Delivery Network

- Rule 3 – Add an Expires Header

- Rule 4 – Gzip Components

- Rule 5 – Put Stylesheets at the Top

- Rule 6 – Put Scripts at the Bottom

- Rule 7 – Avoid CSS Expressions

- Rule 8 – Make JavaScript and CSS External

- Rule 9 – Reduce DNS Lookups

- Rule 10 – Minify JavaScript

- Rule 11 – Avoid Redirects

- Rule 12 – Remove Duplicate Scripts

- Rule 13 – Configure ETags

- Rule 14 – Make AJAX Cacheable
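As an illustration of Rules 3 and 4, here is a minimal sketch that uses only the Python standard library. It is not taken from the book. The helper names, the one-year lifetime, and the sample stylesheet are assumptions made for the example, and the `Cache-Control` header is an addition that does not appear in the list above.

```python
import gzip
import time
from email.utils import formatdate


def caching_headers(max_age_seconds):
    """Rule 3: build a far-future Expires header for a static asset.

    Cache-Control is not in the list above; it is added here as an
    assumption, because HTTP/1.1 caches prefer max-age over Expires.
    """
    expires_at = time.time() + max_age_seconds
    return {
        "Expires": formatdate(expires_at, usegmt=True),  # HTTP date ending in "GMT"
        "Cache-Control": f"max-age={max_age_seconds}",
    }


def gzip_body(body):
    """Rule 4: compress a response body and describe it in the headers."""
    compressed = gzip.compress(body)
    headers = {
        "Content-Encoding": "gzip",
        "Content-Length": str(len(compressed)),
        "Vary": "Accept-Encoding",
    }
    return compressed, headers


# Hypothetical stylesheet: repetitive text compresses well.
stylesheet = b".button { margin: 0; padding: 0; border: 0; }\n" * 200
compressed, gzip_headers = gzip_body(stylesheet)
cache_headers = caching_headers(365 * 24 * 3600)  # one year, an arbitrary choice

print(len(stylesheet), "->", len(compressed), "bytes")
print({**cache_headers, **gzip_headers})
```

In practice the web server or CDN usually sets these headers, so application code rarely needs to. The sketch only shows what ends up on the wire.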

Souders’ follow-up book, Even Faster Web Sites, provides performance tips for today’s Web 2.0 applications.

The golden performance rule, according to Souders, is that 80-90% of end-user response time is spent on the front end, which therefore has the greatest potential for improvement and needs to get simpler. Souders also claims that user perception is more relevant than the actual unload-to-unload response time.

Tools

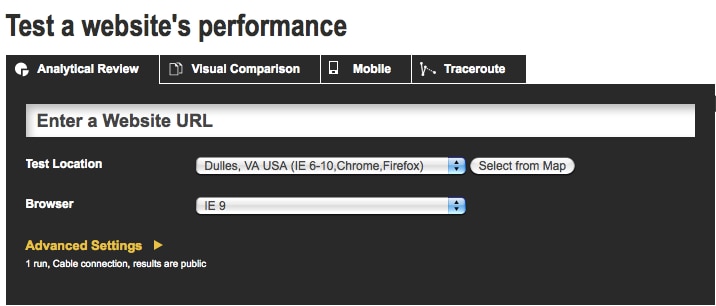

Many tools can help you find the root cause of a slow website, such as YSlow, Pingdom, WebPageTest, Cuzillion, Wireshark, HTTP Archive, and PageSpeed.

They let you run a test from multiple locations around the globe and from different browsers, then return diagnostic charts and suggestions for improvement. Most of them are free, but some offer advanced options that require extra payment.

The test results help you narrow down the list of problems causing delays, whether those are too many HTTP requests, poor caching, image formats, the hosting service, junk lines in your code, DNS name resolution, etc.
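To make the narrowing-down step concrete, the sketch below assumes the test results are available as a HAR (HTTP Archive) document, a JSON format that browser developer tools and some testing tools can export. It groups requests by content type so the heaviest categories stand out. The sample entries are made up, and `summarize_har` and `fake_entry` are hypothetical helper names.

```python
from collections import defaultdict


def summarize_har(har):
    """Group the requests in a HAR document by MIME type.

    Returns {mime_type: {"requests": count, "bytes": total_size}}.
    """
    totals = defaultdict(lambda: {"requests": 0, "bytes": 0})
    for entry in har["log"]["entries"]:
        content = entry["response"]["content"]
        # Drop parameters such as "; charset=utf-8".
        mime = content.get("mimeType", "unknown").split(";")[0].strip()
        totals[mime]["requests"] += 1
        # Treat a missing or negative size as zero.
        totals[mime]["bytes"] += max(content.get("size", 0), 0)
    return dict(totals)


def fake_entry(mime, size):
    """Build a minimal, made-up HAR entry for the demonstration."""
    return {"response": {"content": {"mimeType": mime, "size": size}}}


sample_har = {"log": {"entries": [
    fake_entry("text/html; charset=utf-8", 30_000),
    fake_entry("image/png", 250_000),
    fake_entry("image/png", 120_000),
    fake_entry("application/javascript", 90_000),
]}}

summary = summarize_har(sample_har)
# List the heaviest content types first.
for mime, stats in sorted(summary.items(), key=lambda kv: kv[1]["bytes"], reverse=True):
    print(f'{mime}: {stats["requests"]} requests, {stats["bytes"]} bytes')
```

A breakdown like this points at where to look first, for example at images if they dominate the byte count, or at the number of requests if many small files are involved.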

Web Diet

Performance optimization is essential for your business, since both your revenue and your customers’ satisfaction depend on it. It also matters for the web as a whole: the less data that has to travel, the less clogged the network.

Chris Heilmann, a Mozilla evangelist, builds on this idea in Smashing Magazine, arguing for burning off excess code and building leaner, more future-proof websites. “The Web as it is now is suffering from an obesity problem,” Heilmann claims. The reasons for “an obese web,” as he perceives them, are:

- we don’t develop in realistic environments

- there is a false sense of allegiance to outdated and old technology, namely browsers of the ’90s

- browser differences (API performances)

He suggests a vanilla web diet to slim down the products we build, and describes its principles as building on what works, using a mix of technologies, asking questions of the environment, loading only what’s needed, and so on. You can read more in the full manifesto.

Crash Course

Finally, we recommend the crash course video on web performance with Ilya Grigorik, a web performance engineer and developer advocate on the Make The Web Fast team at Google.

“I could give you one rule per minute, but it doesn’t help if you don’t understand the problem,” Grigorik explains.

Video Link: http://parleys.com/play/5148922b0364bc17fc56ca0c/chapter3/about

Sources/Further Reading:

- Companion web site for the book High Performance Web Sites by Steve Souders.

- Web Performance Today

- Akamai and PhoCusWright Press Release

- Vanilla Web Diet